Oct 19, 2024

This article, unlike the others on this website, is not about how some AI algorithms work. Instead, it is a personal opinion on AI and the future of our world. My hope is to generate more discussions on AI from this perspective.

In such an undertaking, it is likely that I have made mistakes and failed to consider some critical aspect of the whole picture. Please feel free to comment and help me learn more.

After some false starts, we are witnessing the true dawn of Artificial Intelligence (AI) today. Many people, including high-profile entrepreneurs and researchers, are of the view that AI is on track to surpass human intelligence. This leads to different opinions on what impact it will have on the future of humanity.

- Doomsayers like Elon Musk and Geoffrey Hinton believe that AI can dominate our species and take over the world (think The Terminator and 2001: A Space Odyssey).

- Optimists like Mark Zuckerberg and Marc Andreessen consider it a silver bullet and a necessary step towards an advanced civilization (think … no blockbuster? Does fear sell better than hope?).

- A third view is that the future is going to be somewhere in the middle, just like most other innovations in life. AI will solve some of our problems well and humans will keep their dominance in others.

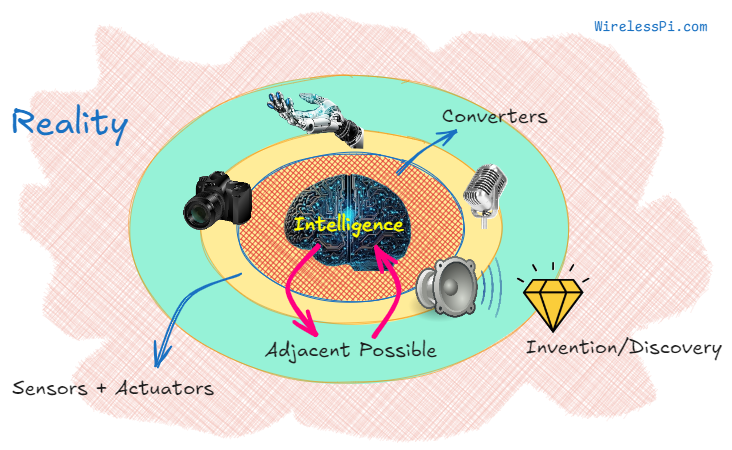

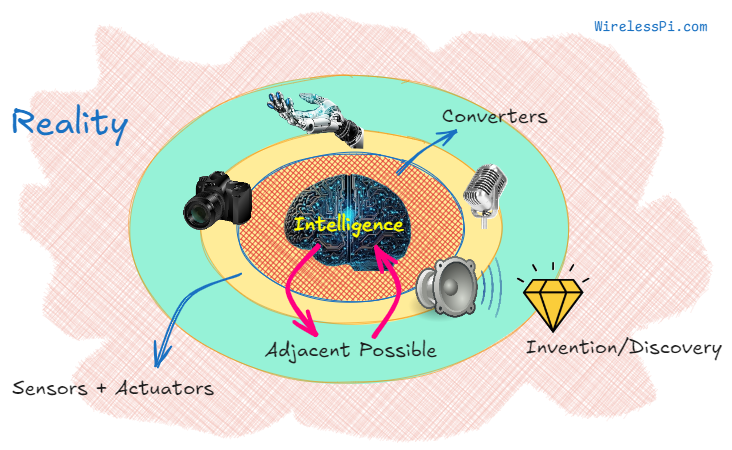

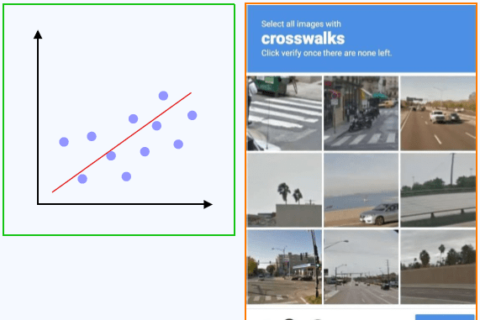

Why do we have such a wide spectrum of predictions for the same technology? To answer this question, consider the figure below.

Guessing the name of the animal and filling in the blanks just came to you naturally. In other matters too, a human mind works with associations and fills in lots of blanks itself. So when we hear the words artificial and intelligence, associated ideas naturally pop up in our heads, including the ones that are not necessarily there. For example:

- More intelligence automatically implies more power to influence the environment, even when this ability is limited in reality.

- Or a superior intelligence is bound to define an ultimate purpose, in the service of which it might dominate the humans.

- Or it would solve all our major problems, like a lost child whose worries end after holding the finger of the accompanying adult in a crowded shopping mall.

Obviously with enough imagination, dots can be connected between any two points.

With the exception of technical presentations and publications, many articles on AI paint a picture that reflects the writer’s imagination instead of reality. I will explain this issue through the eyes of a child who has been observing our scientific progress from outer space, gazing down at our blue-and-white marble of a planet for some time.

Table of Contents

1. The Rise of Artificial Intelligence

1.2 The Greatest Invention Never Mentioned

2.1 Artificial Neural Networks

2.2 Knobs and Dials

3. Roadblocks to General Intelligence

4. The Curious Case of Disappearing Inventions

5. Silicon Valley’s Ultimate Bet

6. The Inconvenient Truth Behind AI

6.1 The 3rd Pillar

The Rise of Artificial Intelligence

To foresee where AI is headed, it is imperative to know where it came from. There are three enabling technologies behind the rise of AI.

Life in the Inanimate

Humans have always shown a keen interest in the art of making inanimate objects appear to move. Contact forces such as a person using a tool or a pair of oxen plowing the field were all too simple. The intriguing case was that of non-contact forces like gravity, electricity and magnetism where the cause is not immediately clear from the effect (even today a swing moving itself late in the night is a key scene in horror movies).

Perhaps those non-contact forces are like the whispers of existence, nudging the inanimate towards life. In particular, the control of electric potential turned out to be a groundbreaking discovery and an electric motor appeared like the magical wizard of the engineering world. It is said that when Mary Shelley wrote the novel Frankenstein, first published in 1818, she knew the concept of revitalization stemming from Galvani’s 1771 experiments, in which the legs of a dead frog twitched due to electric current.

From Victor Frankenstein. Image Copyright: 20th Century Fox

The applications of electricity kept growing with time, from the telegraph and telephone to the light bulb, motion pictures and ultimately the transistor. Finally, the integration of transistors on a chip produced the now ubiquitous processor that implements mathematical algorithms, and this led us to artificial intelligence.

But where did these mathematical algorithms come from?

The Greatest Invention Never Mentioned

If you Google the world’s greatest inventions, or read any book written on this topic, you will see a list including

- physical entities such as fire, wheel, printing press, light bulb, computer, Internet, penicillin, etc., and

- conceptual entities like language, writing, money and the number zero.

In my opinion, the greatest invention ever is never mentioned, without which there is no AI. When we see the number line, we do not realize that the number $1$ represents two different kinds of unity: actual and fictitious (the terms real and imaginary are used in complex numbers). An actual unity is something in the natural world that relates a symbol to a physical object one can see and touch like apples, cats and trees. On the other hand, a fictitious unity is something we imagine and use as a scale.

Someone long ago must have figured out that if discrete entities like oranges and tigers can be counted, then the height of a person or a tree can also be "counted" by treating it as a connected whole of parts, each of which has the same length. This unit length can be any arbitrary reference. For example, ancient people described length in terms of human body parts like the span of a hand, an arm or a foot, or in terms of a randomly chosen stick. Today we use a standard unit known as the meter.

Once we make this conceptual jump from a real unity (like an orange) to an imagined unity (like length of a reference stick), the connection between numbers and discrete physical entities is broken. This has interesting consequences, like severing the connection between the dollar and the gold reserves.

- The fictitious unity assigns length to everything, including the hypotenuse of a triangle and the circumference of a circle. This led to the discovery of irrational numbers (and subsequently the real number line), which would have been impossible if there were only an actual unity counting discrete objects.

- More importantly, this fictitious unity escapes the confines of length and enables us to see numbers everywhere in the cosmic realm. It helps us measure space, time, weight, speed, current, voltage, pressure, force, even color and just about everything else around us with numbers.

Numbers, numbers, everywhere

In summary, it is the fictitious unity that gives rise to all the applications of mathematics in the world and this is why I consider it the greatest invention ever. Without it, there would have been nothing, not even the zero or our mathematical universe. It is also the 1 in the 0s and 1s of a computer.

The Magic in Silicon

It seems that the primary requirement for intelligence is a superabundance of a fundamental unit with memory. Ideally, this fundamental unit should be packed within a small volume on a scale of billions at the least. In a human brain, for example, there are an astonishing $86$ billion or so neurons (along with a comparable number of glial cells) that form the basic building block, and more than 100 trillion connections or synapses among them. We can roughly say that a human mind is a function of memory and association.

\[

\text{Mind} = f(\text{Memory, Association})

\]

In a modern computer too, there are two entities that are superabundant. First is the transistor that forms the basic building block. A modern Graphics Processing Unit (GPU) or an AI chip can have 100s of billions of transistors integrated into a tiny silicon die. The second is the clock speed in GHz that is the rate at which these transistors, acting as logical switches, can be turned on and off. By using these transistors mostly for additions, a computer can grasp the language of numbers. We can roughly say that a computer is a function of memory and addition.

\[

\text{Computer} = f(\text{Memory, Addition})

\]

Why is addition important? While multiplication and division are implemented by specialized techniques, essentially they are just additions under the hood. And even apparently complicated algorithms can be broken down to basic arithmetic operations. A processor, with an Arithmetic Logic Unit (ALU) and programming capability, is perfectly equipped for this task. Thus, a microprocessor becomes a lens in the microscope of mathematics.
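To make the claim about additions concrete, here is a minimal sketch in Python. The function names `multiply` and `divide` are made up for this illustration, and the sketch assumes non-negative integers and a positive divisor. Real processors use far more refined circuits, so this only shows the principle that both operations can be reduced to repeated addition.

```python
def multiply(a, b):
    """Multiply two non-negative integers using only additions and bit shifts."""
    result = 0
    while b > 0:
        if b & 1:              # lowest bit of b is set: add the current multiple of a
            result = result + a
        a = a + a              # doubling is itself an addition
        b >>= 1                # move on to the next bit of b
    return result


def divide(dividend, divisor):
    """Integer division by repeatedly adding the negated divisor (divisor > 0)."""
    quotient = 0
    while dividend >= divisor:
        dividend = dividend + (-divisor)
        quotient = quotient + 1
    return quotient, dividend  # (quotient, remainder)


print(multiply(6, 7))   # 42
print(divide(17, 5))    # (3, 2)
```

The shift-and-add loop needs one pass per bit of `b`, which hints at why hardware can multiply large numbers with only a modest number of additions.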

Mathematics on Steroids

To get a feeling for how AI algorithms work, we have a quick overview of the artificial neural networks that can be regarded as the mathematical workhorse behind most AI applications.

Artificial Neural Networks

Artificial neural networks are computational models inspired by the structure and function of biological neural networks in the human brain. At their core is the artificial neuron, which is nothing more than a little computational building block. The artificial neurons communicate through edges, which represent the connections between neurons, akin to synapses in the brain.

Signal flow: Each artificial neuron receives input signals from its connected neighbors in the form of numbers.

Processing: It applies weights to them and produces an output that can be written as

\[

y = f\left(\sum_{i=1}^{n} w_i \cdot x_i + b\right)

\]

where \(x_i\) represents the features of the input, \(y\) is the output (activation) of the neuron and \(b\) is a bias term.

Activation function $f(\cdot)$: In the above equation, \(f(\cdot)\) is the activation function that introduces non-linearity, allowing it to learn complex relationships. Some examples of activation functions are

– Sigmoid $\rightarrow$ \(f(z) = \frac{1}{1 + e^{-z}}\)

– Tanh $\rightarrow$ \(f(z) = \frac{e^z - e^{-z}}{e^z + e^{-z}}\)

– ReLU (Rectified Linear Unit) $\rightarrow$ \(f(z) = \max(0, z)\) that is the most common activation function these days.
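The neuron equation and the three activation functions listed above can be sketched in a few lines of Python. This is only an illustration: the function names and the example inputs, weights and bias are assumptions chosen for this sketch, not values from any trained network.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    return (math.exp(z) - math.exp(-z)) / (math.exp(z) + math.exp(-z))


def relu(z):
    return max(0.0, z)


def neuron(x, w, b, f):
    """Compute y = f(sum_i w_i * x_i + b) for one artificial neuron."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return f(z)


# Hypothetical inputs and parameters, chosen so that the weighted sum is 0 + b.
x = [1.0, 2.0]
w = [0.5, -0.25]

print(neuron(x, w, 0.0, sigmoid))  # 0.5
print(neuron(x, w, 1.0, relu))     # 1.0
```

Swapping the last argument is all it takes to change the non-linearity, which is why the activation function is usually treated as a design choice separate from the weights.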

Weights $w_i$ and bias $b$: The strength of the signal passing through each connection (edge) is determined by the weight $w_i$ in the equation above. These weights are adjusted during the learning process based on the error (the difference between predicted and actual output) using optimization algorithms (e.g., gradient descent). The goal is to minimize a loss function (e.g., mean squared error) by updating the weights iteratively.

The bias $b$ plays a role similar to the intercept $b$ in a linear equation $y=mx+b$, allowing the curve to move up and down for a better fit.
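As a rough illustration of how weights and bias are adjusted, the sketch below trains a single neuron with an identity activation, $y = wx + b$, using plain gradient descent on the mean squared error. The data set, learning rate and number of epochs are assumptions made up for this example; practical training uses many more parameters and more elaborate optimizers.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y        # predicted minus actual output
            grad_w += 2.0 * error * x / n  # d(MSE)/dw
            grad_b += 2.0 * error / n      # d(MSE)/db
        w -= learning_rate * grad_w        # step against the gradient
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train(xs, ys)
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Here the learned $w$ plays the role of the slope $m$ and the learned $b$ the role of the intercept in $y = mx + b$.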

More advanced algorithms incorporate dot products of a convolution kernel with each layer’s input matrix (Convolutional Neural Networks, CNNs) or introduce loops within a layer across multiple time steps (Recurrent Neural Networks, RNNs).

Deep learning extends artificial neural networks by adding more hidden layers (usually two or more but this definition can change with time), thus creating deep neural networks. They can learn hierarchical features and represent complex patterns in data.

Knobs and Dials

These days Transformers are at the heart of modern Large Language Models (LLMs) as well as most other Generative AI applications, since their ability to process context-rich information makes them ideal for generating natural language, text, images and art. But they also rely on neural networks behind the scenes. These powerful algorithms are an embodiment of mathematics running on steroids.

From the above description, we can see that AI is nothing but a certain type of number crunching. An AI scientist might feel offended at seeing lowly number crunching equated with highly regarded AI. Indeed, AI is very fancy number crunching with trillions of parameters computed on thousands of GPUs operating in parallel, but it is number crunching nonetheless.

The end result is a box with trillions of knobs and dials set at optimal values as a result of training. The output it generates depends on both the input (e.g., a prompt entered in ChatGPT) and the weights and biases in all these layers.

The question is what we can do with this box.

Exciting AI Applications

While machine learning focuses on learning patterns from data sets to solve a specific problem, the applications of AI are only limited by our own imagination.

- Natural Language Processing and Generative AI for text, art, music and video are already established as a part of everyday life.

- AI can be really useful in complex decision making areas such as medical diagnosis, law and battlefields.

- Another application is machine vision for object detection and facial recognition for identification and security purposes.

- AI algorithms also act as the mind behind industrial and personal robots as well as the autonomous vehicles.

For me, the two most exciting AI applications are the following.

Education

Since time immemorial, the fundamental problem of teaching has not changed. A teacher addresses a group of students, each of whom has a different background, understanding of the foundations and intellectual capacity. So the best a teacher can do is to prepare a lecture based on the attributes of an average student.

The problem, as we can see now, is the human teacher. An AI teacher, on the other hand, can easily implement what we call the water-filling algorithm in wireless communications: just as water poured into a container with an uneven floor settles deepest where the floor is lowest, capacity is maximized by giving each subchannel power in proportion to its room for absorption, that is, the gap between its noise floor and a common water level.
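For readers unfamiliar with water filling, here is a minimal sketch of the classical version from wireless communications. The function name `water_filling`, the noise levels and the power budget are assumptions for illustration, and the list of noise levels is assumed to be non-empty. Each subchannel receives $p_i = \max(0, \mu - N_i)$, where $N_i$ is its noise floor and the water level $\mu$ is chosen so that the powers add up to the budget.

```python
def water_filling(noise, total_power):
    """Return (powers, mu) with powers[i] = max(0, mu - noise[i]) summing to total_power."""
    floors = sorted(noise)
    mu = floors[0]
    # Try filling the k quietest subchannels, starting with all of them.
    for k in range(len(floors), 0, -1):
        mu = (total_power + sum(floors[:k])) / k  # candidate water level
        if mu > floors[k - 1]:                    # all k floors lie below the water
            break
    powers = [max(0.0, mu - n) for n in noise]
    return powers, mu


# Hypothetical noise floors for three subchannels and a power budget of 3 units.
powers, mu = water_filling([1.0, 2.0, 5.0], 3.0)
print(powers, mu)  # [2.0, 1.0, 0.0] 3.0
```

In the example, the subchannel with the highest floor stays dry because the water level never reaches it, while the lowest floor receives the most.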

Similarly, an AI teacher can deliver different content to each student based on their level and ongoing progress, with the ultimate purpose of bringing all of them to the same level of understanding. This could be our best attempt at reducing the wealth gap in society.

The only problem with this approach is that AI teaching is only a single irresistible step away from gamifying education. And any nation which gamifies education might produce very learned – and very addicted – citizens.

Financial Markets and Trading

The main goal of the past alchemists, whether in China, India, Arab world or Europe, was the transmutation of base metals (e.g., lead) into gold or to find a universal elixir. The main goal of the modern alchemists today is to apply Predictive AI to financial trends (e.g., in the stock market) or to build a universal learning machine.

There will be interesting outcomes if Predictive AI becomes even better at identifying potential opportunities and adjusting portfolios in real time. Since there will be many AI entities locked in competition to outpace each other, it might become a perfect exhibition of the Red Queen effect, named after a chess piece who tells Alice in Through the Looking-Glass:

"Now, here, you see, it takes all the running you can do, to keep in the same place."

In the future, we might see the same effect in many other AI applications as well where everything is faster on an absolute scale but the same on a relative one.

Having seen the bright side of an AI-assisted future, let us turn our attention to whether a machine can match and then surpass human intelligence by learning on its own. On this route, there are some potential roadblocks that are not easy to overcome.

Roadblocks to General Intelligence

Amateurs believe that evolution is a process in which "plants and animals reproduce on a one-way route toward perfection", in the words of Nassim Taleb. In a similar vein, many people today believe that the progress in AI is on a one-way route toward general intelligence. This is not necessarily true. There are certain obstacles that are conveniently overlooked in such conversations.

An Inescapable Prison

The road to artificial intelligence is built on mathematical asphalt. Many mathematicians and scientists, including past and recent Nobel laureates, express surprise at how well mathematics represents reality. In fact, Eugene Wigner’s 1960 essay titled The Unreasonable Effectiveness of Mathematics in the Natural Sciences is the closest thing we have to a viral article in the scientific community.

"The book of nature is written in the language of mathematics." – Galileo Galilei

"In my opinion, everything happens in nature in a mathematical way." – René Descartes

"God is a mathematician of a very high order and He used advanced mathematics in constructing the universe." – Paul Dirac

"There is only that much genuine science in any science, as it contains mathematics." – Immanuel Kant

"If you want to learn about nature, to appreciate nature, it is necessary to understand the language that she speaks in." – Richard Feynman

"The connection between mathematics and reality is a miracle, but it works." – Frank Wilczek

"It’s actually unreasonable how well mathematics works. Why should the world behave according to mathematical laws?" – Anton Zeilinger

But I think that when you enter the world of mathematics from the door of fictitious unity described in the first section above, it does not look that surprising. The fictitious unity gives rise to a corresponding real number line on which different operations can be performed. From here, certain axioms, identities and theorems emerge which may or may not represent reality. This role of mathematics through a broader lens is illustrated in the table below.

Leaving aside the trivial case (yellow box above, phenomena which do not exist in the universe and have no mathematical explanation), the other three possible cases are as follows.

Luminary case

Shown in pink above is the 1st box, the scenarios in which the world behaves according to mathematical laws. Assigning numbers to anything as well as how they change is bound to give rise to some great matches between mathematics and reality.

The luminaries quoted above commit an error by focusing on this case alone and marveling at how unreasonably effective mathematics is, completely missing the remaining three cases. This is natural: when we speak from inside a box, all we see is the inside of the box.

Theoretical case

The 2nd box in green represents a range of mathematical theories that have no connection to reality. The proponent hopes that their theory will find a practical application one day. On occasion, it happens but not often. Also, mathematicians and scientists keep adding new theories into this box.

Nikola Tesla, arguably the greatest scientist of all time, realized it in his time when he said:

"Today’s scientists have substituted mathematics for experiments, and they wander off through equation after equation, and eventually build a structure which has no relation to reality."

Intriguing case

The 3rd box shown in blue is by far the most intriguing case. It represents those aspects of reality that have no direct mathematical underpinnings. Examples at present include most of biology, ecology, earth science, chemistry and neuroscience, not to mention consciousness, free will and other matters of philosophy, social and political organization and a great number of unknown unknowns. As an example, On the Origin of Species published in 1859 by Charles Darwin did not contain a single mathematical equation.

Marveling at mathematical representations of some universal laws is similar to feeling surprised that one can make so many meaningful English sentences from just 26 letters. Obviously one is totally ignoring the infinite number of sentences that nhueicnj iotriur mxk’jzvkn lidmjvg* pioucjxkdfj nyhpg eytuwe qwq!!

In a parallel universe where most scientists live inside this 3rd box, a Eugene Wigner would have written a viral article titled The Reasonable Ineffectiveness of Mathematics in the Natural Sciences (and Beyond). I often think of this box as similar to standing on a beach that separates the ocean from the land. While the land appears to be the center of all activity, the ocean holds its own countless secrets, revealed only to those who dare to dive in with enough courage and imagination.

Here, I do not mean to say that mathematics is of no use. Undoubtedly, mathematics is an excellent tool for both explaining current observations and formulating predictions that dictate experimental approaches. What I am saying is that the Mathemania on which AI hype is built today is not entirely justified.

There was a time when chess and Go were considered pinnacles of human intelligence. Looking back, a good play relies on computing an optimal move in a rules-governed scenario without going through all possible combinations, a task that is perfectly suited to number crunching devices. Moreover, clear feedback is also available in the form of numbers. Therefore, defeating humans in board games says more about numerical optimization capability than the intelligence of the machine.

The real world does not have rules like board games. Most of existence is non-linear and complex to a level at which mathematical techniques quickly become insufficient or intractable. Extracting valuable feedback from the clutter is like mining uranium. An artificial neural network tries to escape these limitations by connecting an output to an input through layers steered by trillions of tunable parameters. In the end, though, does this number crunching lead to intelligence?

In my opinion, mathematics is a downstream process that gives answers and insights on existing phenomena. On the other hand, intelligence involves an upstream process that poses great questions and has the ability to explore a cause-and-effect trail. In other words, mathematics mostly explains what is there; it rarely, if ever, describes what is not there. It can tell all the fancy details about biomechanics of a horse’s joints and legs but no equation can ever take us from horses to steam engines!

The same applies to AI and computing. I have not seen a better description than the one by Jeff Hawkins when he writes in his book On Intelligence about the mind:

…, the cortex creates what are called invariant representations, which handle variations in the world automatically. A helpful analogy might be to imagine what happens when you sit down on a water bed: the pillows and any other people on the bed are all spontaneously pushed into a new configuration. The bed doesn’t compute how high each object should be elevated; the physical properties of the water and the mattress’s plastic skin take care of the adjustment automatically.

According to Ludwig Wittgenstein:

"Limits of my language are the limits of my world."

Similarly, limits of mathematics are the limits of AI. At a fundamental level, it is a prisoner of mathematics.

Interaction with Reality

When I was a child, I had three dreams that I wished would come true some day.

- To have a video call (that was the era of landlines).

- To have access to a video library where I could watch the highlights of any cricket match ever played.

- To be teleported immediately to any desired place.

As you can see, the first two are now possible while the third is not. Can we extract some general ideas about what is achievable with modern technology? For this purpose, we need to explore how an intelligent entity interacts with reality. As easy as it seems, it is not a simple process.

Sealed in a dark chamber, no intelligence – whether natural or artificial – knows exactly what the world looks like. Instead, its experience of reality emerges from within, assisted by 'onboard' sensors. Using this input information, the intelligence itself constructs a map of the world. This is why all 8 billion of us have different maps of reality in our heads.

Consider the figure above to delve into this idea further.

Converters

Since AI is a digital entity, the captured information needs to be digitized into 1s and 0s. For electronic signals, this is done with the help of Analog to Digital Converters (ADC) and Digital to Analog Converters (DAC).
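To see what such a conversion involves, here is a simplified sketch of an idealized uniform converter pair in Python. The function names `adc` and `dac`, the 0 to 1 volt range and the 3-bit resolution are assumptions chosen for readability. Real converters also involve sampling in time, noise and non-ideal behavior that this sketch ignores.

```python
def adc(voltage, v_min=0.0, v_max=1.0, bits=3):
    """Map an analog voltage to an integer code in [0, 2**bits - 1]."""
    levels = 2 ** bits
    clipped = min(max(voltage, v_min), v_max)          # saturate out-of-range inputs
    code = int((clipped - v_min) / (v_max - v_min) * levels)
    return min(code, levels - 1)                       # v_max maps to the top code


def dac(code, v_min=0.0, v_max=1.0, bits=3):
    """Map an integer code back to the voltage at the center of its step."""
    step = (v_max - v_min) / (2 ** bits)
    return v_min + (code + 0.5) * step


code = adc(0.3)
print(format(code, "03b"))  # 010
print(dac(code))            # 0.3125
```

The round trip returns 0.3125 instead of 0.3. This small quantization error is the price of describing a continuous quantity with a finite number of 1s and 0s, and it shrinks as more bits are used.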

The real world is messy and not all phenomena can be broken down in the form of 1s and 0s. This restricts the applications where AI can play a role. So the expanse of the digital world determines how long the chains of AI can be.

Sensors

The human sensory system mainly consists of vision, hearing, touch, taste and smell. The stimuli from the outside world are converted into signals that are sent to the neocortex for signal processing to generate perception. As compared to humans, a machine can have more senses (e.g., to measure energy, force, torque, motion, position) and in extended ranges (e.g., vision beyond visible light, hearing beyond the audible range) through cameras, LiDAR, radar, ultrasonic sensors, accelerometers and gyroscopes, to name a few.

Some of these stimuli are exceptionally well-suited to digitization from the analog world and reproduction back to analog form after processing. Examples are vision, hearing and text.

- Audio: a microphone acts as an ear and speaker as a larynx (voice box).

- Video: a camera acts like eyes and a screen or projector shows the scene back.

Others like taste, touch and smell are ill-suited to digitization. If taste could be digitized back and forth, we would have a Google of the restaurant business serving customers all over the world. The figure below looks amazing until we realize that everything in the image is related to vision, hearing or text.

This is why the first two dreams of mine (video call and watching cricket highlights) were realized while teleportation was not. This is also why flagship AI applications are related to text, images, video, and music. Today ChatGPT and LLMs are household names while the main focus of many Generative AI startups is on natural language processing, audio recognition, music production and computer vision.

This front can be the biggest success for Generative AI. In the next few years, I expect a movie similar to Avatar entirely made by an AI from the script to the final form.

Joanna Maciejewska captures this idea nicely in her quote:

"I want AI to do my laundry and dishes so that I can do art and writing, not AI to do art and writing so that I can do laundry and dishes."

As it turns out, art and writing are easier for an intelligent machine than laundry and dishes. The reason is described next.

Actuators

An actuator takes an input signal from inside and transforms it into mechanical energy to accomplish a task outside. It is a crucial interface that enables an entity to force its will on the world. As humans, we can change something in our environment through arms and legs. For machines, this could be robotic arms, joints and axes in automated industrial production and any other moving parts.

Hal preparing to cut Frank’s air tube. Image Copyright: MGM

One factor which is severely downplayed in AI discussions is the impact of actuators. The ability to manipulate the environment is a crucial requirement for any intelligent entity. For some reason, after hearing the word intelligence, we automatically fill in the blanks, pull all the associated concepts together and take its ability to shape the environment for granted. This does not necessarily follow. Imagine a superintelligent dolphin. There is a limit to the range of options available to the creature.

In humans, motor cortex impulses direct neurons in the spinal cord to contract muscles in order to initiate a movement. Our arms, legs and more importantly, thumbs and fingers, have played a great role in influencing the environment by enabling us to use many tools and improve on the outcomes of our experiments.

As compared to animal limbs, robotic limbs consume far too much energy to replicate the same effect on a large scale. Unlike its electrical sibling, the mechanical world is slow and hard. This is where AI can face a major handicap and this is an area where most AI substance can be differentiated from the hype. Much of the space between conceiving an idea and bringing it into the real world is filled by our own imagination.

As an example, manufacturing even a simple pencil is a long and difficult process involving coordinated efforts from several teams, from design to sourcing materials and from production to testing. On the other hand, AI prophets talk about an AI manufacturing slave robots (or anything else it needs) by the bucketload with a single click. The pessimists are particularly prone to neglecting the underlying details. Consider the following quote from Nick Bostrom’s book Superintelligence.

Our demise may instead result from the habitat destruction that ensues when the AI begins massive global construction projects using nanotech factories and assemblers—construction projects which quickly, perhaps within days or weeks, tile all of the Earth’s surface with solar panels, nuclear reactors, supercomputing facilities with protruding cooling towers, space rocket launchers, or other installations whereby the AI intends to maximize the long-term cumulative realization of its values.

He talks about "… tile all of the Earth’s surface with solar panels, nuclear reactors, supercomputing facilities with protruding cooling towers, space rocket launchers,…" as if it were as easy as going to the supermarket and buying toothpaste. The only thing more surprising than people actually writing such overstatements is the inclination of the general public to accept them.

What they miss is best described in the words of John Salvatier:

"Reality has a surprising amount of detail."

Moreover, even the makers tend to forget most of the details when the end product is beautiful.

The Adjacent Possible

If number crunching is a relatively ineffective way to intelligence, what are the alternatives? I do not know the answer but a hint is in the traditional experiment.

Imagine our world as a vast landscape of possibilities. At any given moment, there exists a set of changes that can be made. These are the feasible steps humankind can take based on the rules and constraints of their current reality. This is known as the adjacent possible, an idea devised by Stuart Kauffman and drawn in a figure shortly.

In the words of Steven Johnson:

"The adjacent possible is a kind of shadow future, hovering on the edges of the present state of things, a map of all the ways in which the present can reinvent itself."

And it keeps growing with time from the existing state of the world. Many scientists of the past have utilized this route to create new inventions and open up new possibilities for their descendants. But how does this work?

The key to learning is feedback. Organisms are feedback loops interacting with reality: the ones who (a) observe or measure, (b) learn or filter and (c) actuate or respond in a better manner are the ones who thrive (this is similar to how a Phase Locked Loop (PLL) works).
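The observe, filter and respond cycle can be sketched as a heavily simplified first-order phase tracking loop in the spirit of a PLL. The function name `track_phase`, the loop gain and the reference phase are assumptions for illustration. A real PLL tracks a time-varying signal with a proper loop filter and oscillator, which this sketch leaves out.

```python
import math


def track_phase(reference_phase, steps=50, loop_gain=0.2):
    """Drive a local phase estimate toward a fixed reference phase via feedback."""
    estimate = 0.0
    errors = []
    for _ in range(steps):
        # (a) Observe: phase detector output, wrapped into (-pi, pi].
        difference = reference_phase - estimate
        error = math.atan2(math.sin(difference), math.cos(difference))
        # (b) Filter: here just a proportional gain.
        correction = loop_gain * error
        # (c) Actuate: nudge the local estimate.
        estimate += correction
        errors.append(abs(error))
    return estimate, errors


estimate, errors = track_phase(1.0)
print(errors[0], errors[-1])  # the error shrinks from 1.0 toward zero
```

Each pass through the loop removes a fixed fraction of the remaining error, so the quality of the outcome depends directly on how clean the observation in step (a) is and how freely the loop can act in step (c).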

Similarly, a scientist can pursue one of the following two methods:

- One interacts with reality through conducting experiments in a laboratory or in nature. Few inventions are clear from start to finish; it is a gradual process. So from the present state of technology, the scientist explores the adjacent possible by tinkering here and there, observing and recording the consequences and constructing a mental picture of cause and effect to make testable predictions. Based on this feedback, they introduce some changes in the environment and run the process again.

As drawn in the above figure, this is a scientist who is more likely to come up with a $0\rightarrow 1$ invention or discovery (more on this below), as compared to the one discussed next.

- The second looks around and sees most modern technologists running their science on number machines. This is also the scope of AI. It can sense the environment well. But if it can think more and do little, the distance between reality and thought increases with every step.

For an AI, the actuator and association handicap translates into limited changes introduced in the environment, limited learning through cluttered feedback and hence incremental invention at best. Number crunching alone cannot help the AI enter the realm of unknown unknowns that happens with the exploration of the adjacent possible.

Now what are these $0\rightarrow 1$ and incremental inventions?

The Curious Case of Disappearing Inventions

In my opinion, there are two kinds of inventions.

- $0\rightarrow 1$ invention: This happens when the creation is a noticeable jump from the existing state of the world.

- Incremental invention: This is an improvement on what already exists, more like an innovation or evolution.

To elaborate on this classification further, I have drawn a timeline of some $0\rightarrow 1$ inventions below, all of which played a significant part in shaping the world we live in today. The yellow box represents the time frame for the development of a modern computer which does not have a single birth moment.

The most striking part of this figure is the large empty region (shown in red) in the past 50 years. After the microprocessor (1971), the only big jumps we have are the Internet and ChatGPT. Why is this region not populated?

To find an answer to this question, I asked none other than ChatGPT itself to list all the major inventions of the past 50 years. The list it produced includes the cell phone (computer + wireless), GPS (satellite + wireless), USB drive, WiFi, Wikipedia, YouTube, electric car, self-driving car, solar panels, mp3 players, bitcoin, drone, virtual reality, Internet of Things and so on. Almost all of them are incremental advances on existing technology (there are some exceptions with bigger and bolder ventures; time will tell how they go). In other words, we have not been able to produce a major $0\rightarrow 1$ product in the past many decades!

But this does not make sense, even more so when we consider the following.

- We are living in the most technologically advanced time in history.

- We also have the largest number of professional scientists working today as compared to any other time in the past!

- Not only that, these heroes command the same prestige as the knights of old days, hence attracting the best and the brightest talent.

- It is hard to believe that all the low-hanging fruit was picked by the scientists of the 18th, 19th and first half of the 20th century, while the top scientists of the current generation are left only with incremental improvements.

To find an answer, let us explore the road that has brought us to AI.

Silicon Valley’s Ultimate Bet

If we look at the predictions from science fiction stories and movies of the last century, we come upon teleportation, immortality, glass cities, an army of robot workers, jetpacks, cars flying in sky highways, invisibility cloak and time travel. In fact, after the first moon landing in 1969, the company Pan Am started taking advance bookings for round-trip travel between earth and the moon. However, none of the above ever came to fruition.

Time travel, the most elusive of all human ambitions. Image Copyright: Universal Pictures

It was mainly the problems related to computing and communication that got solved. But progress has been slow outside that realm on (much more) important fronts such as climate change, water shortages and most biological fronts (e.g., a cure for Alzheimer’s disease or fixing a limb/organ). And these are relatively easy issues compared to the predictions mentioned above such as immortality, teleportation or intergalactic travel.

Peter Thiel described it best when he said:

"We wanted flying cars. Instead we got 140 characters," referring to Twitter.

So what was going on in the technology world during all this time?

The Age of One-Trick Pony

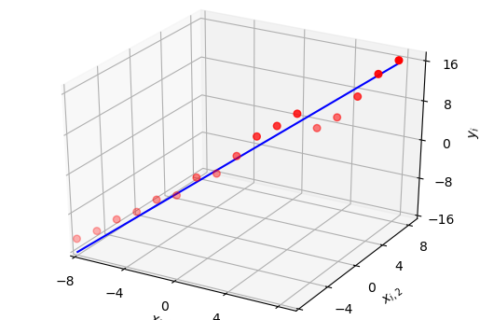

The transistor is the wheel of modern civilization. Gordon Moore, the co-founder of Fairchild Semiconductor and Intel, predicted in 1965 that the number of transistors in a dense integrated circuit would double every year, a rate he revised in 1975 to about every two years (18 to 24 months is the figure commonly quoted today). Just like an organism adapted to the environment grows exponentially from 2 to 4, 8, 16, … and populates the whole ecosystem in favorable conditions, Moore’s law implies that the transistor count on the same chip area keeps doubling and an exponentially large number of them fit in the same die.

This scaling down of the transistor size has spawned revolutionary growth in the world of computing, electronics and society in general in the last 60 years. In addition to the long-term microprocessor trend, it has influenced the sizes of memory, sensors and pixels in digital cameras and, most importantly, the computational complexity of AI algorithms.

The figure below shows what exponential growth looks like.

At the start, there is not much to show for your effort. Then, there is a phenomenal rise … of everything. In this context, we are talking about the number of transistors, the power of the machine, the problems it can solve, its widespread proliferation and consequently profits generated by the companies. And this is what everyone loves.
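The slow start followed by a steep rise can be reproduced with a few lines of arithmetic. The sketch below assumes a fixed doubling period of two years and a starting count of one, both chosen only for illustration; `transistor_count` is a hypothetical helper, not a fit to real chip data.

```python
def transistor_count(years, start_count=1.0, doubling_period_years=2.0):
    """Relative transistor count after `years`, assuming a constant doubling period."""
    return start_count * 2 ** (years / doubling_period_years)


for years in (0, 10, 20, 40, 60):
    print(f"after {years:2d} years: {transistor_count(years):,.0f}x")
```

Under these assumptions the first decade yields a factor of 32, while six decades yield a factor of about a billion, which is why the early part of the curve looks almost flat next to the later part.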

As a consequence, technology overwhelmingly evolved in one particular direction, the direction of computing, and so did the trend of solving every problem through mathematics and coding.

According to the inventor of the Kalman filter, Rudolf Kalman:

"Once you get the physics right, the rest is mathematics."

With the rise of machine learning and AI, this can be modified as follows.

"Once you get the mathematics right, the rest is coding."

This strategy is the familiar Maslow’s hammer in hindsight.

"If all you have is a hammer, everything looks like a nail."

But that also makes us a one-trick pony. We are at a stage where, when we say technology, we mean a computing device within a product! Moreover, due to the impact of Moore’s law, people have started thinking that all technology moves at a similar pace and the trend will continue forever.

The question is, what favors the computing industry among all the available options for investment of time and capital?

The Speed of Money

In capitalism and a free market economy, the winning products tend to be the ones that bring the largest reward for the least amount of resources, not necessarily the ones most important for humankind or even for a nation. This is similar to the path of least resistance taken by an electric current.

And this makes sense too. No investor can be blamed for a desire to make money in a harsh world where probably 90% of all projects fail. Bigger ventures attempting to solve real-world challenges have an even higher probability of failure. Since software is producing new millionaires and billionaires every year, why look somewhere else?

In the words of Steve Blank:

"If investors have a choice of investing in a blockbuster cancer drug that will pay them nothing for fifteen years or a social media application that can go big in a few years, which do you think they’re going to pick? If you’re a VC firm, you’re phasing out your life science division.

$\vdots$

Compared to iOS/Android apps, all that other stuff is hard and the returns take forever."

The problem is that just like the oil wells in the world are going to run dry one day, the silicon well too is going to run dry soon.

Before that happens, AI is Silicon Valley’s largest bet. I think that this is an interesting finale. If AI falls short of the hype, which is a likely scenario, it will create a whole paradigm shift and scientists will have to confront much bigger challenges.

However, there is a way in which that moment can be further delayed.

The Inconvenient Truth Behind AI

The slope of innovation tends to get steeper as you ascend. This has also happened with progress in AI.

The 3rd Pillar

Since the early days, humans have always had three resources available for progress.

- Raw materials

- Energy

- Knowledge

This is drawn in the figure below.

Initially, the traditional focus was on increasing the raw materials and energy resources while the 3rd dimension – gaining more knowledge – was not even on the radar. With the scientific revolution came the value of knowledge.

As an example, British economist Thomas Malthus predicted that populations would continue to rise until they outgrew the food supply. This would cause a tragic decline in population due to famine and conflicts over the limited food available. However, advances in knowledge of farming techniques during the Industrial Revolution resulted in far greater food production than anyone could have dreamt of. Consequently, his fears never materialized and the case became a textbook example of knowledge application.

Unfortunately, the trend in technology in general and AI in particular has gone in the opposite direction (see the arrow in the figure above). As innovation becomes harder, engineers have started throwing more and more resources at the problem at hand in terms of raw materials and energy. They know that a common person cannot differentiate between products that come out of the following two processes.

- Big innovation + limited resources

- Little innovation + lots of resources

This is illustrated in the figure below where both products look similar, although there is a 25% and 75% switch between them in terms of resources and ingenuity.

As a consequence, a little jump in innovation and a much higher jump in resources can be presented as a highly innovative technology! This 'more' can easily hide the lack of ingenuity from a non-technical person.

For example, in my own field – wireless communications – there have been few advances in the past 20 years, despite the fact that IEEE articles published during this time can fill whole rooms in printed form. Then the question is how the wireless companies are sustaining 100x or 1000x data rates. The answer, you guessed it, is the huge amount of resources thrown into achieving this goal. In particular, 5G wireless is driven mainly by a massive number of antennas (massive MIMO) and huge bandwidths (in the mmWave band). No customer can tell the difference between a signal arriving at their phone from an 8-antenna base station and a 512-antenna base station or whether their phone is using 10 MHz bandwidth or 80 MHz bandwidth. And 6G is set to consume even more power, hardware and bandwidth.

Recent advances in AI are no different. After the Transformer breakthrough in 2017, it is the power of resources that is advancing the cause. It takes 1000s of GPUs and electricity consumption equal to that of small towns to train a language model, in addition to consuming practically everything that is out there on the web. For example, according to widely reported estimates, it took about 100 days of running 25000 NVIDIA A100 GPUs at a cost of about $100 million to train GPT-4.
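To get a feel for the scale, the GPT-4 figures quoted above can be turned into GPU-hours with simple arithmetic. The sketch below takes those three numbers at face value and assumes the GPUs ran around the clock; the helper `training_footprint` is hypothetical, and the cost per GPU-hour is only what the quoted figures imply, not a published price.

```python
def training_footprint(num_gpus, days, total_cost_usd):
    """Return (GPU-hours, implied cost per GPU-hour), assuming 24 hours of use per day."""
    gpu_hours = num_gpus * days * 24
    return gpu_hours, total_cost_usd / gpu_hours


gpu_hours, usd_per_gpu_hour = training_footprint(25_000, 100, 100e6)
print(f"{gpu_hours:,} GPU-hours, about ${usd_per_gpu_hour:.2f} per GPU-hour")
```

That is 60 million GPU-hours for a single training run, which gives a rough sense of how much of the recent progress is bought with hardware and energy.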

This 'more' is being marketed to the masses as a growth in intelligence. A non-technical person gets an impression that machines in the labs of Silicon Valley are running algorithms that are making them smarter every day in an infinite loop, like a teenager during a growth spurt. This is an example of exaggeration used in AI circles.

AI and Life of Pi

What we lack in intelligence, we make up for in hyperbole. According to recent news reports, Sam Altman (head of OpenAI) pitched a USD 7 trillion proposal to TSMC and the UAE for further AI development. To put things in perspective, this amount is more than what the richest country in the world, the United States, spent in FY 2023!

A common theme in AI circles is the use of extravagant language like singularity, breakneck speed, breathtaking developments, dramatic improvements, and so on. I am sure that the reader has either sat in presentations or watched on YouTube how 100 years' worth of innovations will be accomplished in the next 4 years (a variant of the curve shown below) because – and this is interesting – extrapolating the inventions timeline tells us so. This is commonly known as the Law of Accelerating Returns proposed by Ray Kurzweil [2], based on which he prophesied that the singularity is near.

I quote below from a viral article AI Revolution written in 2015 by Tim Urban [3].

Kurzweil suggests that the progress of the entire 20th century would have been achieved in only 20 years at the rate of advancement in the year 2000; in other words, by 2000, the rate of progress was five times faster than the average rate of progress during the 20th century. He believes another 20th century’s worth of progress happened between 2000 and 2014 and that another 20th century’s worth of progress will happen by 2021, in only seven years. A couple decades later, he believes a 20th century’s worth of progress will happen multiple times in the same year, and even later, in less than one month. All in all, because of the Law of Accelerating Returns, Kurzweil believes that the 21st century will achieve 1,000 times the progress of the 20th century.

According to Kurzweil, Urban and many other folks telling similar tales, we should have had a whole 20th century’s worth of progress from 2014 to 2021!

Now look around yourself and tell me: what has meaningfully changed in your life during those 7 years? For me, hardly anything after the iPhone in 2007, but even if there are a few hits I have missed, do you really see an equivalent of the long list of inventions from 1900-1999 appearing in 2014-2021?
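The rates implied by the quoted passage are easy to work out. The sketch below simply divides one century of progress by the number of years each claim allows for it; the period labels and the helper `rate_multiple` are my own shorthand for the figures in the quote.

```python
CENTURY_YEARS = 100  # one "20th century's worth" of progress, at the 20th-century average rate


def rate_multiple(years_needed):
    """How many times faster than the 20th-century average progress must be."""
    return CENTURY_YEARS / years_needed


claims = {"at the year-2000 rate": 20, "2000 to 2014": 14, "2014 to 2021": 7}
for period, years in claims.items():
    print(f"{period}: {rate_multiple(years):.1f}x the 20th-century average")
```

In other words, the claim for 2014 to 2021 requires progress at roughly 14 times the average pace of the 20th century, sustained for seven years.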

When every scientist knows that very few lab successes translate into real commercial products, why do we say and consume such hyperbole? Maybe because most of us are quite optimistic about technology or to some extent, some investors in AI startups benefit from creating an AI hype in the media.

But a better answer, in my opinion, comes from the novel Life of Pi written by Yann Martel. The conversation between Pi and the officials from the Japanese Ministry of Transport (conducting an inquiry into the shipwreck) goes as follows.

"So tell me, …, which story do you prefer? Which is the better story, the story with animals or the story without animals?"

…

Mr. Chiba: "The story with animals."

…

Pi Patel: "Thank you. And so it goes with God."

So when it comes to technology and human civilization, ask yourself: Which is the better story, the story with AI or the story without AI?

For most of the technologists and futurists, the preferred story is that of an AI God.

Instead of gradually solving harder and harder problems ourselves, how thrilling it is to boot an AI God that can then work out everything for us, as illustrated in the figure below. Observe how naturally AI fits in between the hard problems and really hard problems.

This story sounds so good that we cannot help but believe in it. On that note, who can blame Tim Urban for reinforcing this idea in the same article [3]:

If our meager brains were able to invent wifi, then something 100 or 1,000 or 1 billion times smarter than we are should have no problem controlling the positioning of each and every atom in the world in any way it likes, at any time—everything we consider magic, every power we imagine a supreme God to have will be as mundane an activity for the ASI as flipping on a light switch is for us. Creating the technology to reverse human aging, curing disease and hunger and even mortality, reprogramming the weather to protect the future of life on Earth—all suddenly possible.

The danger here, as ancient civilizations learned the hard way, is that reality has little regard for our beliefs.

Concluding Remarks

In summary, electricity is our most potent weapon among the fundamental forces with practically unlimited applications (shown as dots in the figure below). One of them, a microprocessor, teams up with mathematics, a treasured item in our mental toolbox, to give rise to the digital age that reaches its peak in the form of AI (the term $X$ in this figure represents all our conceptual tools excluding mathematics).

Just like the Pied Piper led the children of Hamelin to a cave, Moore’s law has led the technology world to this narrow pipe shown above. For the past 60 years, our civilization has had a good run during which computing+code has been the magic potion for solving every problem.

But no infinity lasts forever. Now Silicon Valley has placed its ultimate bet on AI and the future of the world depends on the outcome.

I believe that AI will never attain true human-like intelligence. In the article, I mentioned intelligence being an upstream process that poses great questions and has the ability to explore a cause-and-effect trail. Furthermore, my hunch is that intelligence is not simply a function of the neocortex of our brains. Instead, it has deep roots in the survival instincts of the old brain. Core attributes of intelligence are embedded not in computations but in life itself. In the quest for a real AI, we blur the boundaries between intelligence and life, filling in lots of blanks ourselves.

However, AI will prove to be a useful invention. While the odds of it annihilating us or solving all our problems are not high (except maybe through climate costs from reckless overuse of AI), the frightening part about adopting a computational route is that any new learning can be quickly replicated into millions of machines in less than a second. That kind of network scale opens up new possibilities even with small innovations. So discounting the Artificial General Intelligence (AGI) possibility, my guess is that AI will turn out to be beneficial/harmful in entirely surprising ways as compared to Sci-Fi predictions so far.

The bright side is that at the end of the AI hype, we will have to confront serious problems like global warming, sustainable development and biotechnology that can only be solved through doing real science, not writing code.

This means that the world needs more Nikola Teslas. Instead of taking over the world, AI can take over the coding realm.

References

[1] Wigner, E. P. (1960). "The unreasonable effectiveness of mathematics in the natural sciences." Communications on Pure and Applied Mathematics. 13 (1): 1–14.

[2] Kurzweil, R. (2004). The Law of Accelerating Returns. In: Teuscher, C. (eds) Alan Turing: Life and Legacy of a Great Thinker. Springer, Berlin, Heidelberg.

[3] Urban, T. (2015). AI Revolution. https://waitbutwhy.com/2015/01/artificial-intelligence-revolution-1.html

Wonderful article, Qasim.

Agree on all key conclusions.

We have managed to create something that has passed the Turing test by spending billions of dollars, but we are not likely to build the actuators/AI that will differentiate between a flower and a plastic wrapper from the side of the road and pick it up.

The problem is, as you quoted, that capitalism will only care about the minimal-energy path of maximum return, and the inventions to replace call center operators with chatbots are likely to get more funding than solving Alzheimer’s or solving the world’s garbage or plumbing problems.

Even if AI starts observing itself and learning from itself (pseudo-consciousness), the capitalistic push to build an organic intelligence will be very little. We don’t need to be scared of AI; we are scary enough (to destroy ourselves).

Yes, it feels that we take the path of least resistance in our quests, without considering in detail where the road will take us in the end.

Kudos, my hat is off to you. This piece is the most logical, honest, and real assessment of science and technology I have read to date. In tackling artificial “intelligence”, you so deftly encompassed the entire advancement of the human species, and applied a long needed damping to what has become the religion of mathematics. Useful tools that should never substitute experimental observations into the physics that govern reality. Cheers.

That’s right. Thank you.

Thank you, Qasim for your articles and books.

There is nothing new under the Moon.

When nuclear weapons were invented, people also built more and more powerful bombs, exploring the new possibilities.

It looks like AI is in the phase of quantitative development.