Thomas Bayes was an English statistician and Presbyterian minister who came up with this theorem in 18th century during his investigation on how to update the understanding of a phenomenon as more evidence becomes available. At that time, he did not deem it worthy of publication and never submitted it to any journal. It was discovered in his notes after his death and published by his friend Richard Price.

In the past, Bayesian theorem was associated with highly complicated mathematics (and rightly so), and hence it was generally a topic of interest for mathematicians, statisticians and similar professionals. However, as we enter the data age at the turn of the century, the impact of Bayesian statistics now touches everyday decisions and actions that need to be taken by most of the humans on the planet. This impact not only ranges from ordinary matters such as what we buy to what we watch but also from life defining choices such as whom to marry to where to settle. Machine learning and artificial intelligence are now seamlessly integrated into our lives, thanks to an extensive use of the Bayesian methodology.

With this background, this article serves as a beginner’s guide to Bayesian inference. Although the example used is a wireless communication problem, the general idea can be applied to any other field. Feel free to skip the wireless terminology used to introduce the system setup in the next few lines.

Signal in Noise Problem

In digital communication, a modulation symbol $s$ is sent over the air through a wireless channel $h$. This symbol can take a set of discrete values such as +1 or -1 in the binary case. The received signal $r$ is given by

\[

r = s\cdot h + \text{noise}

\]

This is the model mostly followed in most infrastructure based wireless systems using multicarrier modulation such as 5G and WiFi. A simple block diagram of such a setup is drawn in the figure below.

In the absence of channel fading $h$ (e.g., simple AWGN channels or massive MIMO with ideal channel hardening), the relation between the observed channel outputs $r$ and symbols $s$ becomes

\[

r = s + \text{noise}

\]

With received data $r$ available, our goal is to detect the symbol $s$ sent from the transmitter. There are two approaches that can be explored to solve this problem.

Maximum Likelihood

In this case, we simply focus on the probability of the observation, given that it originated from a certain candidate. Mathematically, this can be written as

\begin{equation}\label{equation-ml-relation}

\text{Compute for each candidate: } p(\text{observation … given … the candidate})

\end{equation}

Then, we can compare all possible candidates for $s$ (e.g., +1 and -1 for binary scenario) and choose the one with the highest such probability. This is known as a maximum likelihood approach.

In the context of signal in noise problem, we apply this principle by computing the probability of an observation $r$, given that the sent symbol $s$ was $-1$ or $+1$ as follows.

- Let the probability of the observation $r$, given the sent symbol $s$ was $-1$, be $p (r ~\text{given}~ s=-1)$.

- Let the probability of the observation $r$, given the sent symbol $s$ was $+1$, be $p (r ~\text{given}~ s=+1)$.

Now a decision can be made by choosing the maximum of these two probabilities. In general, there is less ambiguity regarding very large negative or very large positive values. The former decode back to symbols $-1$ and the latter to $+1$ with a high probability (or bits 1 and 0, respectively). However, this approach fails us when the observations $r$ have a small magnitude, the scenario where most frequent errors occur.

Bayesian Approach

A better approach then is to compute the probability of a given candidate given the new observations. Reversing Eq (\ref{equation-ml-relation}), we get

\begin{equation}\label{equation-bayesian-relation}

\text{Compute for each candidate: } p(\text{candidate … given … the observation})

\end{equation}

That turns the first approach around and places things in a natural order because the question is posed as updating our belief about a possibility after the availability of information. This approach brings us to the celebrated Bayes’ Theorem (to put things into context, historically there have been heated arguments between what the statisticians call the frequentist and Bayesian camps).

To understand the Bayesian approach, let us take a well known puzzle described by Daniel Kahneman in his book "Thinking Fast and Slow".

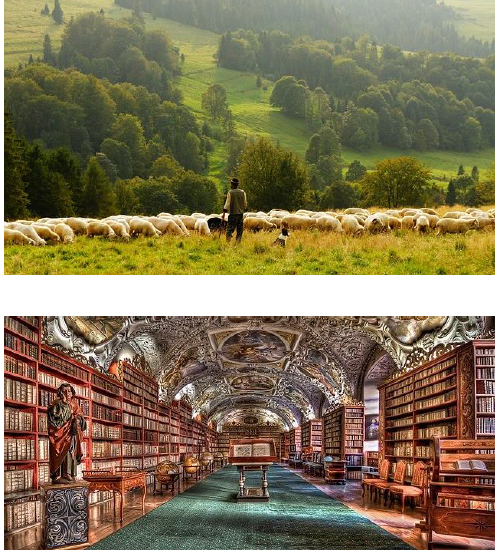

An individual has been described by a neighbor as follows: "Steve is very shy and withdrawn, invariably helpful but with little interest in people or in the world of reality. A meek and tidy soul, he has a need for order and structure, and a passion for detail." Is Steve more likely to be a librarian or a farmer?

According to Kahneman, most people assume Steve is a librarian, thus illustrating the inability of our minds to think in probabilistic terms. "Did it occur to you that there are more than 20 male farmers for each male librarian in the United States? Because there are so many more farmers, it is almost certain that more `meek and tidy’ souls will be found on tractors than at library information desks." In other words, we hardly take into account the prior probability in real world problems!

The prior probability of an uncertain quantity expresses one’s belief about it before some evidence shows up. Then, we fuse this prior probability with the likelihood in the light of new information and update our belief. Mathematically, we can write this update process as

\begin{aligned}

p\Big(\text{candidate … given … the observation}\Big) ~\propto~ \hspace{2in} \\ \underbrace{p\Big(\text{candidate}\Big)}_{\text{prior belief}}\cdot \underbrace{p\Big(\text{observation … given … the candidate}\Big)}_{\text{likelihood of that candidate}}

\end{aligned}

\end{equation}\label{equation-posterior-prob}$$

where a proportional sign instead of an equality indicates the absence of a normalizing constant. In the context of the above puzzle, we can write

\[

\begin{aligned}

p \Big(\text{librarian … given … a meek and tidy soul}\Big) \propto \hspace{2in} \\ \underbrace{p\Big(\text{librarian}\Big)}_{\text{prior probability}}\cdot \underbrace{p \Big( \text{a meek and tidy soul … given … librarian}\Big)}_{\text{likelihood of that candidate}}

\end{aligned}

\]

Even when the likelihood of a meek and tidy soul being a librarian is high, the prior probability $p(\text{librarian})$ is very low as compared to $p(\text{farmer})$ in the United States (around $1:20$). Consequently, the product of the above two probabilities on the right hand side is relatively small. This clarifies the confusion in correctly answering this puzzle.

We now see how this philosophy can be applied to decoding a communication signal in noise.

The Log Likelihood Ratios (LLR)

In the context of signal in noise problem, we decide whether each bit is a 0 or a 1 after the observations are presented to us.

- Let the probability of $s=-1$, given the observation $r$, be $p ( s=-1 ~\text{given}~ r)$.

- Let the probability of $s=+1$, given the observation $r$, be $p ( s=+1 ~\text{given}~ r)$.

Here, prior probabilities appear in the form of $p(s=+1)$ and $p(s=-1)$. We decide in favor of the larger probability after the update, e.g., if $p ( s=+1 ~\text{given}~ r)$ is greater than $p ( s=-1 ~\text{given}~ r)$, then $s=+1$ is a better candidate for symbol decision.

\[

p ( s=+1 ~\text{given}~ r) ~~\ge~~ p ( s=-1 ~\text{given}~ r) \hspace{0.5in} \Rightarrow \hspace{0.5in} \frac{p ( s=+1 ~\text{given}~ r)}{p ( s=-1 ~\text{given}~ r)} \quad \ge \quad 1

\]

Taking logarithms on both sides, we get

\begin{equation}\label{equation-aposterior}

\underbrace{\log \left\{\frac{p ( s=+1 ~\text{given}~ r)}{p ( s=-1 ~\text{given}~ r)}\right\}}_{L(s ~…~ \text{given} ~… ~r)} \quad \ge \quad 0

\end{equation}

This expression is known as the Log Likelihood Ratio (LLR). We decide in favor of $s=+1$ if the above LLR is positive and vice versa. Applying Eq (\ref{equation-posterior-prob}) to this term, we get

\[

\begin{aligned}

L(s ~…~ \text{given} ~… ~r) =& \log \left\{\frac{p ( s=+1 ~\text{given}~ r)}{p ( s=-1 ~\text{given}~ r)}\right\} \\ =& \log \Bigg\{\frac{p ( r ~\text{given}~ s=+1)\cdot p(s=+1)}{p ( r ~\text{given}~ s=-1)\cdot p(s=-1)}\Bigg\}

\end{aligned}

\]

Using the property $\log \left(A\cdot B\right)$ $=$ $\log A$ $+$ $\log B$, we have

\begin{equation}\label{equation-llrs}

L(s ~…~ \text{given} ~… ~r) = \underbrace{\log \Bigg\{\frac{p ( r ~\text{given}~ s=+1)}{p ( r ~\text{given}~ s=-1)}\Bigg\}}_{\text{Channel reliability}} \quad + \quad\underbrace{\log \Bigg\{\frac{p(s=+1)}{p(s=-1)}\Bigg\}}_{\text{Prior probability}}

\end{equation}

The first term in the right hand expression depends on the ratio of likelihoods discussed before which is known as channel reliability in communications jargon. The second term depends on the ratio of a priori probabilities of the possible candidates. These are obtained through using two, not one, decoders that exchange information between each other over a number of iterations. This iterative decoding structure yields large coding gains that propels the system performance closer to the Shannon limit.

If you enjoyed reading this article, you might also like the reason why the Monty Hall problem is so perplexing and AI – An advanced civilization or a one-trick pony.