Machine learning is probably the defining technology of the past decade. As in nearly every walk of life, it is playing an increasingly significant role in existing and future wireless networks. In this article, we explore the big picture of this exciting field.

Nature vs Man

Humans have long been fascinated by the workings of the mind, which many science fiction stories imagine being replicated by machines. While investigating Artificial Intelligence (AI, of which machine learning is a subset), many scientists observed that machines need not copy the brain exactly. Matching its performance at a functional level is good enough, and it could open the door to eventually surpassing the brain with more advanced computers.

This is because nature does not always evolve towards the best solutions.

History supports this. We made cars faster than cheetahs not by designing four-legged vehicles but by inventing the wheel. We can fly hundreds of people at once in an aeroplane not by imitating birds but by understanding and exploiting the underlying principles of mechanics and aerodynamics.

In the same spirit, we hope that modern machine learning techniques can do better than human brains while staying on the current road to progress. This is why most of the machine learning literature focuses on mathematical algorithms and techniques that can be implemented on fast computers.

Machines = Computing Machines

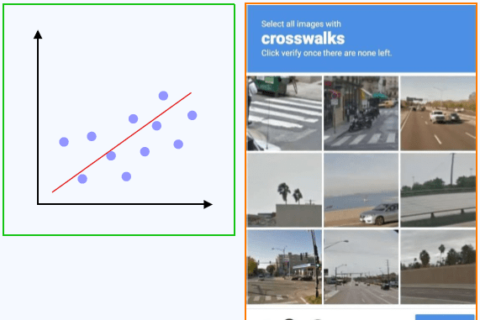

So what exactly is machine learning? The simple definition by Tom Mitchell is as follows.

A computer program is said to learn from data if its performance on assigned tasks improves with experience when measured according to some performance criteria.
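To make the definition concrete, here is a toy sketch in Python. Mitchell's formal version speaks of a task T, experience E and a performance measure P. Below, T is classifying numbers in [0, 1) against a hidden threshold, E is a set of evenly spaced labelled examples, and P is accuracy on a fixed test grid. The threshold value, the midpoint learner and all function names are hypothetical choices made for illustration, not an algorithm taken from this article.

```python
from typing import List, Sequence, Tuple

# Hypothetical hidden concept for the toy task T (unknown to the learner).
TRUE_THRESHOLD = 0.37
# Fixed test grid used to measure performance P.
TEST_POINTS = [i / 1000 for i in range(1000)]


def label(x: float) -> int:
    """Ground truth for task T: 1 if x is at or above the hidden threshold."""
    return int(x >= TRUE_THRESHOLD)


def learn_threshold(examples: List[Tuple[float, int]]) -> float:
    """Place the decision boundary midway between the largest negative
    and the smallest positive example seen so far (experience E)."""
    negatives = [x for x, y in examples if y == 0]
    positives = [x for x, y in examples if y == 1]
    lo = max(negatives, default=0.0)
    hi = min(positives, default=1.0)
    return (lo + hi) / 2


def accuracy(threshold: float, points: List[float]) -> float:
    """Performance measure P: fraction of points classified correctly."""
    correct = sum(int(x >= threshold) == label(x) for x in points)
    return correct / len(points)


def run_experiment(sizes: Sequence[int]) -> List[Tuple[int, float, float]]:
    """Train on n evenly spaced examples for each n and record (n, threshold, P)."""
    results = []
    for n in sizes:
        training = [(i / n, label(i / n)) for i in range(n)]
        threshold = learn_threshold(training)
        results.append((n, threshold, accuracy(threshold, TEST_POINTS)))
    return results


if __name__ == "__main__":
    for n, threshold, p in run_experiment((2, 5, 20, 100)):
        print(f"E = {n:3d} examples -> threshold {threshold:.3f}, P = {p:.3f}")
```

As the number of examples grows, the learned threshold closes in on the hidden one and P rises (0.88 with two examples, above 0.99 with twenty). That improvement with experience is what qualifies this program as learning under the definition above.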

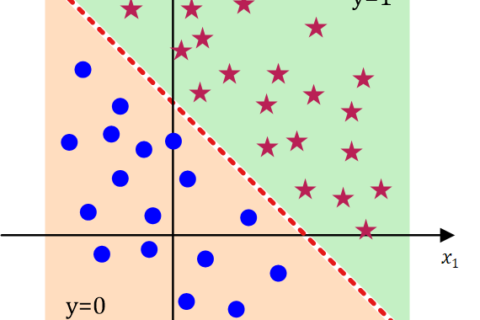

Here, I should mention the broad classes of algorithms, such as supervised, unsupervised, semi-supervised and reinforcement learning, as well as the dozens of different types of machine learning algorithms used in practice. But I leave their details for future articles. Instead, today I will focus on what machine learning is not.

Moore’s Law

Let us begin with Moore’s law. Gordon Moore, the co-founder of Fairchild Semiconductor and Intel, observed in 1965 that the number of components on a dense integrated circuit was doubling every year, and in 1975 he revised this rate to about every two years. This steady shrinking of the transistor has driven revolutionary growth in computing, electronics and society in general over the last 50 to 60 years, and it set the stage for the dawn of machine learning.
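The doubling rule is plain exponential growth, N(t) = N0 × 2^((t − t0) / Td), where Td is the doubling period. A minimal Python sketch, assuming a two-year doubling period and taking the Intel 4004 of 1971 (about 2,300 transistors) as a hypothetical starting point, shows both halves of the curve: the first decade adds only about 71,000 transistors, while the last decade of the projection adds roughly 75 billion.

```python
def projected_transistors(base_count: float, base_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Exponential growth N(t) = N0 * 2 ** ((t - t0) / Td)."""
    return base_count * 2 ** ((year - base_year) / doubling_period_years)


if __name__ == "__main__":
    # Hypothetical baseline: roughly 2,300 transistors (Intel 4004, 1971).
    previous = None
    for year in range(1971, 2022, 10):
        count = projected_transistors(2300, 1971, year)
        # Show how many transistors each decade adds on top of the last one.
        added = "" if previous is None else f" (+{count - previous:,.0f} this decade)"
        print(f"{year}: {count:,.0f}{added}")
        previous = count
```

This is an idealized extrapolation rather than a record of actual chips; real transistor counts have followed the trend only approximately.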

As a consequence, technology is evolving overwhelmingly in one particular direction: the direction of computing. By virtue of Moore’s law, the number of transistors (and now cores) keeps growing, and so does the trend of solving every problem through mathematics and coding.

According to Rudolf Kalman, the inventor of the Kalman filter,

"Once you get the physics right, the rest is mathematics."

We can expand it as follows.

"Once you get the mathematics right, the rest is coding."

Why does this phenomenon form the bedrock of all the recent progress?

A Hammer in Your Hand

To answer this question, we have to understand what exponential growth looks like.

At the start, there is not much to show for your effort. Then, there is a phenomenal rise … of everything. In this context,

- of the number of transistors …

- of the power of the machine …

- of the problems it can solve …

- in its widespread proliferation …

- of the company valuation …

- of the revenue

and this is what everyone loves. Few people in Silicon Valley and beyond can afford to take a slow and painful road in some other direction when the current strategy is producing several new billionaires every year.

In hindsight, this winning strategy is the familiar Maslow’s hammer.

"If all you have is a hammer, everything looks like a nail."

On a side note, this also reveals the power of focus. When all of humankind sets its eyes on implementing mathematical equations on computers, we get the tactile internet and virtual reality.

From Algorithms to Unknowns

But that also makes us a one-trick pony.

In other words, if we subtract the effect of Moore’s law from the past half-century, humankind has relatively little progress to show. This is the price we pay for that exponential growth.

If we look back at the predictions in science fiction stories and films of the last century, the ones that have come true, often with the help of machine learning and artificial intelligence algorithms, are those related to computing and communication, e.g., video calls, drones, biometrics and augmented reality.

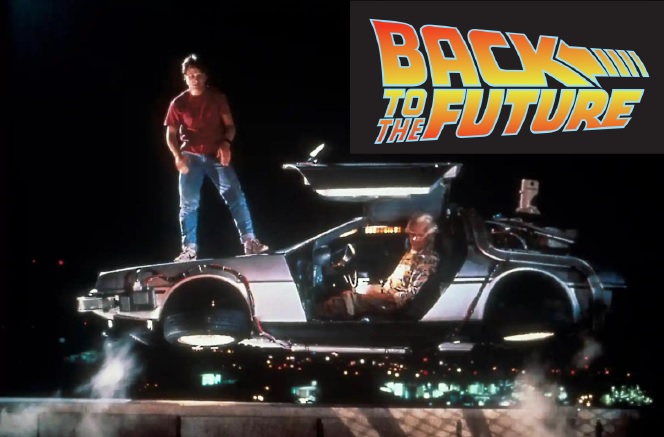

Outside that realm, however, progress has been slow on (arguably far more) important fronts, partly because of the opportunity cost of concentrating on computing. These include climate change, water shortages, food affordability and most biological challenges (e.g., curing cancer or repairing a limb or organ). And these are relatively easy compared with the predicted intergalactic travel, extended human lifespans or time travel.

Perhaps we are treating learning computers the way people treated horses before the 20th century: we think we need more of them, and faster ones. But what we might actually need is a new Henry Ford to give us cars, i.e., something completely unknown at this point in time.

By definition, exploring the unknown unknowns cannot rely on learning computers. And there are reasons to be optimistic. The 1969 moon landing succeeded even though the Apollo Guidance Computer was, in many respects, less capable than today’s calculators.